SALSA LipSync with Dissonance Voice Chat

Overview

The SALSA and Dissonance teams have worked together to come up with an integration solution that is simple and works great!

We will be using Dissonance Voice Chat, SALSA LipSync, and the free SalsaDissonanceLink add-on to create a lip-sync'd voice-chat project. Dissonance supports a wide variety of networking systems, making it super flexible and compatible with nearly any project. In this tutorial, we will specifically utilize Unity's Networking system (as our transport mechanism). Other networking options should work, but have not been tested by Crazy Minnow Studio.

NOTE: This implementation is only a simple example and is not intended as a full-featured solution. Your needs will certainly vary and your implementation will require knowledge of the network and character avatars you choose to work with. Crazy Minnow Studio does not provide implementation support for network chat or model avatar systems outside of SALSA Suite configuration options. While the SalsaDissonanceLink add-on should provide necessary link-up configuration for SALSA and Dissonance in your configuration of choice, it may require modifications if the core integration dynamics change with your implementation of Dissonance beyond the HLAPI setup.

In some configurations, it may be desirable to let SALSA perform its own audio analysis calculations. This may become possible in future Dissonance implementations by connecting SALSA to an appropriate network-serialized AudioSource. For now, the simplest integration is to let Dissonance feed its own audio calculations to SALSA.

Support

We (Crazy Minnow Studio) are happy to provide SALSA LipSync Suite-related support. However, we do not provide support for third-party assets. If you have problems getting the third-party asset working, please contact the asset's publisher for assistance.

NOTE: Please remember, the source is included for this free add-on and this should be considered example code that you can use to jump-start your project. It is not intended to fit all scenarios or requirements -- you are free to update it as necessary for your needs. We do provide limited support for all of our products; however, we do not make development changes to support specific project needs.

For SALSA LipSync Suite-related support, please email (assetsupport@crazyminnow.com) the following information:

- Invoice number (support will not be provided without a Unity Invoice Number).

- Operating System and version.

- SALSA Suite version.

- Add-on version (generally located in an associated readme or the script header comment).

- Full details of your issue (steps to recreate the problem), including any error messages.

- Full, expanded component screenshots (or video).

- Full, expanded, associated hierarchy screenshots (or video).

- Super helpful: video capture of issue in action if appropriate.

Requirements for This Example Implementation Tutorial

The SALSA and Dissonance asset systems have been updated to be compatible with each other for voice-chat lip-synchronization. As such, you will need to get the latest versions of SALSA and Dissonance to ensure a smooth experience:

- SALSA LipSync v2.0.0+

- Dissonance Voice Chat v6.4+

Please refer to the readme file included with the Dissonance Voice Chat asset and the Dissonance documentation. NOTE: Dissonance requires Unity version 2017.4+.

- The latest UNet (HLAPI) integration package (provided by Dissonance). Download link is provided by Placeholder Software via in-editor help panel.

- The free SalsaDissonanceLink v2.0.0 add-on -- see release notes below.

- A known working microphone.

- [optional] boxHead.v2 model, included in the core SALSA LipSync Suite asset.

ATTENTION: These instructions require you to download and install the appropriate add-on scripts into your Unity project. If you skip this step, you will not find the applicable option in the menu and/or component library.

NOTE: While every attempt has been made to ensure the safe content and operation of these files, they are provided as-is, without warranty or guarantee of any kind. By downloading and using these files you are accepting any and all risks associated and release Crazy Minnow Studio, LLC of any and all liability.

Installation

NOTE: For information on how to import/install Unity AssetStore packages or unitypackage files, please read the Unity documentation.

- Import SALSA LipSync into your project and familiarize yourself with SALSA using the online documentation for SALSA LipSync.

- Import Dissonance Voice Chat and familiarize yourself with Dissonance using the Dissonance Voice Chat - Getting Started guide.

- For this tutorial, we are only importing the UNet_HLAPI integration add-on (refer to the UNet_HLAPI Getting Started guide).

- After both SALSA and Dissonance have been imported into your project, download the SalsaDissonanceLink add-on and import it into your project.

NOTE: You will need to provide the Invoice Number you received when you purchased SALSA LipSync Suite to download the add-on. (Your invoice number may be the same for both products (SALSA and Dissonance) if they were purchased together.)

Usage: Setting up Voice-Chat and Lip-Sync

There are three basic steps for configuration:

- Create a spawnable prefab for the player characters.

- Create the Dissonance manager object.

- Create the Unity Networking manager object.

After all of the pieces are created, we can test our scene.

Build a Player Prefab

To properly use character models in a networked setting, the models need to be spawned/instantiated at run-time (after the network services have started). Unity Networking provides a simple, no-frills interface to spawn characters into a network-connected scene upon the client's connection to the server. The following steps demonstrate creating a prefab from the SALSA boxHead character (ensure you have already installed SALSA, Dissonance, and the SalsaDissonanceLink add-on -- see Requirements and Installation steps above):

- Drag the boxHead model into a blank scene.

Add the following components to the new player-prefab object:

* Add Salsa from the component menu: Crazy Minnow Studio > SALSA LipSync > SALSA

> Remember: It is necessary to configure SALSA. For this boxHead implementation, we can simply apply the boxHead OneClick. Additionally, some character models, such as FUSE, iClone, DAZ, UMA, etc. will require a different setup. Please review and implement the respective one-click or other configuration methods for your model type.

- SALSA v2 requires that no AudioSource be configured and that "Use External Analysis" be enabled. Dissonance will feed its analysis calculations to SALSA.

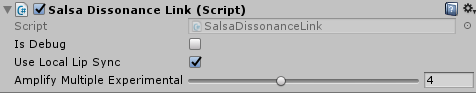

* Add SalsaDissonanceLink from the component menu: Crazy Minnow Studio > Addons > SalsaDissonanceLink.

- The dynamic between SALSA and Dissonance has changed with respect to the analysis calculations. We have added an experimental feature to help compensate: Amplify Multiple Experimental. In our testing, setting this value between 3 and 4 produces good results. It will, of course, depend on your microphone implementation and Dissonance settings.

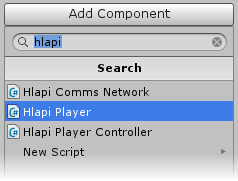

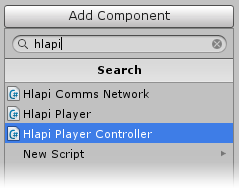

* Add a Dissonance Voice Player from the project list (remember, we are configuring a UNet system). Navigate to Dissonance > Integrations > UNet_HLAPI > HlapiPlayer and drag it to your player-prefab:

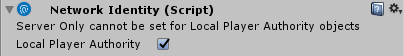

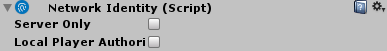

> NOTE: Adding the Dissonance Voice Player should automatically add a Network Identity component as well. If it does not, you will need to add it manually. From the component menu, select: Network > Network Identity.

IMPORTANT: Enable Local Player Authority on this player prefab's Network Identity.

Optional Components: (Required for the tutorial)

The following components are not necessary to establish voice-chat or lip-synchronization; however, the new Eyes module will give the character more life and the player controller will allow us to position our players for better viewing. NOTE: this tutorial assumes the following are added and configured.

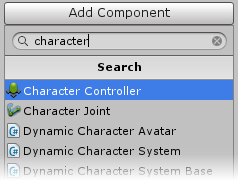

Add a player movement controller: we added the Unity Standard Assets Character Controller for movement.

-

Add the Dissonance HLAPI Player Controller.

-

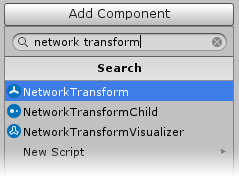

Add a UNet Network Transform to support the player controller; otherwise, movement will not be translated across the network.

Remember: Eyes also needs to be configured for your model. Or for simplicity, you can configure it later.

-

Once configured, our player-prefab components look like this (screenshot)

-

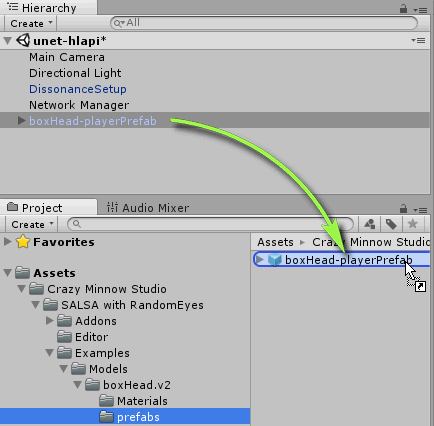

Create your player-prefab by dragging the scene hierarchy object to the Editor Project list (we saved our prefab in a prefabs folder under the boxHead model). Note: follow the specific directions for your network system -- it may be required to place the prefab in a Resources folder.

Setup Dissonance

The Dissonance website has an excellent collection of documentation. Specifically, there is a quickstart section on setting up a Unity Networking scenario.

- Drag the DissonanceSetup prefab into the scene. Learn about Dissonance Comms.

- From the project list, navigate to: Dissonance > Integrations > UNet_HLAPI > DissonanceSetup.

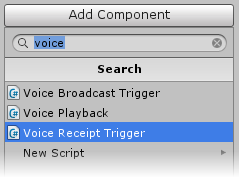

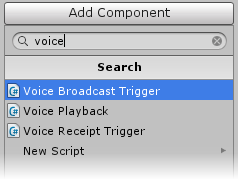

Add the following components to the DissonanceSetup scene object (created in the previous step):

- Add a UNet Network Identity: from the component menu, select Network > NetworkIdentity.

> NOTE: Neither option on this component should be enabled.

- Add a Dissonance Voice Receipt Trigger from the project list: Plugins > Dissonance > VoiceReceiptTrigger. Learn more about the Voice Receipt Trigger.

NOTE: Set the chat room to Global.

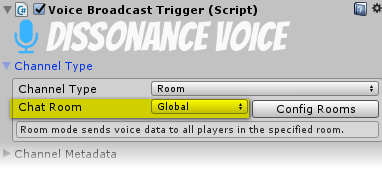

Add a Dissonance Voice Broadcast Trigger from the project list: Plugins > Dissonance > DissonanceBroadcastTrigger. Learn more about the Voice Broadcast Trigger

NOTE: For this demo, we will configure two options.

-

First, set the chat room to

Globalin the Channel Type section.

-

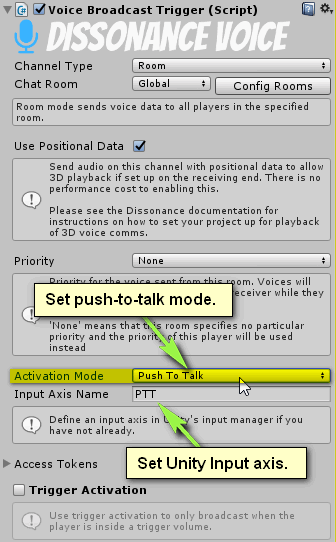

Second, set the

Activation Modeto 'Push To Talk'. This may not be desirable for your project; however, this setting helps us test the project using a single computer.

-

-

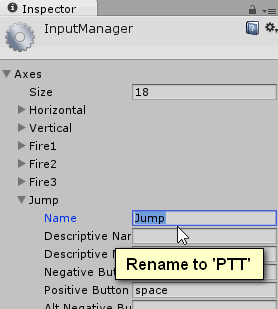

Also configure a Unity Input axis as "PTT" (we simply changed the Jump axis [space] to PTT). [Edit > Project Settings > Input]

-

Once configured, our DissonanceSetup object's components look like this (screenshot).
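To confirm that the "PTT" input axis exists before making a build, a small throwaway script along the following lines may help. This is only a sketch: PttAxisCheck is a hypothetical name, the script is not part of SALSA or Dissonance, and it assumes the axis was named exactly "PTT" in Unity's Input settings as described above.

```csharp
using UnityEngine;

// Hypothetical debugging helper (not part of SALSA or Dissonance).
// Attach it to any active scene object while testing, then remove it.
public class PttAxisCheck : MonoBehaviour
{
    // Assumption: the axis name matches the one configured in
    // Edit > Project Settings > Input.
    private const string PttAxisName = "PTT";

    private void Update()
    {
        // If the axis has not been defined, Unity reports an error
        // here naming the missing button, which points to a typo
        // or a missing Input entry.
        if (Input.GetButtonDown(PttAxisName))
        {
            Debug.Log("PTT pressed");
        }

        if (Input.GetButtonUp(PttAxisName))
        {
            Debug.Log("PTT released");
        }
    }
}
```

If the console shows the pressed/released messages in Play mode, the axis is set up and can be used for Push To Talk.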

Setup Unity Networking

Setting up a 'Network Manager' is pretty flexible. We will add a new root object and call it 'Network Manager'. Confirm your components resemble ours.

- Create a new empty GameObject in the scene and rename it to 'Network Manager'.

- Add the following components to the Network Manager object:

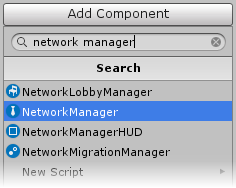

Add a Network Manager from the component menu, select: Network > NetworkManager or add the component from the inspector.

-

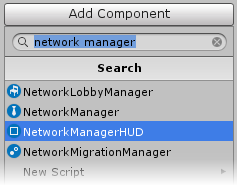

To more easily facilitate starting and joining a server, we added a NetworkManagerHUD. From the component menu, select: Network > NetworkManagerHUD or add the component from the inspector.

-

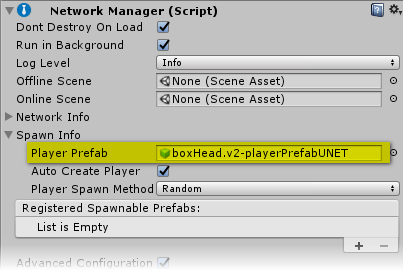

Configure the UNet Network Manager:

- Link the player prefab object we saved in the project list to the Network Manager's Spawn Info > Player Prefab slot.

- Additionally, enable Advanced Configuration and confirm there are 2 QoS channels.

- Channel 0 = reliable

- Channel 1 = unreliable

Once configured, our Network Manager object components look like this (screenshot).
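The QoS channel layout can also be checked from a script at run-time. The sketch below is an assumption-laden convenience, not part of the tutorial's required setup: QosChannelCheck is a hypothetical name, and the script reads the UNet NetworkManager's channels list to compare it against the two channels listed above.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.Networking;

// Hypothetical sanity check (not part of SALSA, Dissonance, or UNet).
// Attach it to any scene object alongside the Network Manager setup.
public class QosChannelCheck : MonoBehaviour
{
    private void Start()
    {
        // The UNet NetworkManager registers itself as a singleton on Awake.
        NetworkManager manager = NetworkManager.singleton;
        if (manager == null)
        {
            Debug.LogWarning("QosChannelCheck: no NetworkManager found in the scene.");
            return;
        }

        // Expected layout from this tutorial:
        // channel 0 = reliable, channel 1 = unreliable.
        List<QosType> channels = manager.channels;
        bool matches = channels.Count == 2
                       && channels[0] == QosType.Reliable
                       && channels[1] == QosType.Unreliable;

        if (matches)
        {
            Debug.Log("QosChannelCheck: QoS channels match the tutorial setup.");
        }
        else
        {
            Debug.LogWarning("QosChannelCheck: QoS channels differ from the tutorial setup ("
                             + channels.Count + " channel(s) configured).");
        }
    }
}
```

A warning here only means the configuration differs from this tutorial; other channel layouts may be valid for your own project.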

Build and test

To test, we will need to create and run a build of our project.

- Save your scene and project.

- Ensure the project is not set to Run in Background. [Edit > Project Settings > Player Settings]

- Open the Build Settings:

- Click the Add Open Scenes button.

- Click the Build and Run button.

- Once the app runs, connect and serve as the host.

- Next, run the application in the Editor and connect as the client (either build can operate as host or client).

NOTE: You will most likely need to adjust the positioning of your character models as they will likely spawn on top of each other.

- If you added the optional Player Controller components, you can maneuver one or both avatars into more advantageous positions.

- If you did not add the component, you can switch to scene mode in the editor and manually move one or both models.

- Ensure one of the windows has focus and press the button you configured in the Unity Input Manager as the PTT button.

- Speak into your microphone and the remote player in the other window should lip-sync to your voice.

API Information

SalsaDissonanceLink is a very simple linkage component. As such, there is zero configuration for the component itself (except for enabling local lip-sync). Under the hood, there is a single public property that may be of interest to developers.

public float Boost //min 0, max 1

This value allows you to set a non-clipping amplification level. The value is represented as a normalized, "more-is-more" setting (0 being no amplification and 1 being full Boost).

IMPORTANT: A setting of 1 turns the data being fed to SALSA into a constant stream of the maximum value, meaning your model's mouth will be wide open constantly, even if there is no audio signal. The setting defaults to 0.65f, which proved to be a practical value in our testing. Your particular needs or scenario may have different requirements.

NOTE: this is a (run-time) programmatic setting and cannot be set in the Editor (design-time).
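As a rough illustration of setting Boost at run-time, the sketch below uses a hypothetical helper script (SalsaBoostSetter, not part of the add-on) attached to the same player-prefab object as SalsaDissonanceLink. It relies only on the public Boost value described above. If the add-on class lives in a namespace, a matching using directive would also be needed (check the script header).

```csharp
using UnityEngine;

// Hypothetical helper (not shipped with the SalsaDissonanceLink add-on).
// Assumption: SalsaDissonanceLink is on the same GameObject and exposes
// the public float Boost described above.
public class SalsaBoostSetter : MonoBehaviour
{
    // Exposed in the Inspector so the value can be tuned at design-time,
    // then applied at run-time. 0.65 mirrors the documented default.
    [Range(0f, 1f)]
    [SerializeField] private float boost = 0.65f;

    private void Start()
    {
        SalsaDissonanceLink link = GetComponent<SalsaDissonanceLink>();
        if (link == null)
        {
            Debug.LogWarning("SalsaBoostSetter: no SalsaDissonanceLink found on this object.");
            return;
        }

        // Keep the value inside the documented 0..1 range. Note that a value
        // of 1 holds the mouth wide open, so stay below it in practice.
        link.Boost = Mathf.Clamp01(boost);
    }
}
```

Exposing the value through a serialized field is one way to work around Boost not being settable in the Editor; the value is still applied only when the scene runs.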

Troubleshooting and Operational Notes:

- No known issues.

Release Notes:

v2.0.0 (beta) - (2019-06-22):

+ Initial release for SALSA LipSync v2.